Streaming live performances is commonplace these days. While the technology for delivering a performance has improved massively over the past years, the methods for connecting the performer with the audience have not undergone the same evolution. The most common forms of audience/performer interaction in a remote setting remain a standardized chat system or, possibly, a live poll.

This project aims to explore novel ways of integrating the audience into a performance context by displaying audience feedback within a performer’s software interface. We chose to focus on disc jockeys (DJs), as audience feedback is key during their performances. DJs rely heavily on direct and indirect feedback from an audience to make informed decisions during their performances, such as which songs to play.

In a remote DJ performance, where the DJ is not in the same location as the audience, much of the natural feedback disappears, such as the ability to see or hear the audience. This project explored providing DJs in a remote context with direct audience feedback, displayed in the software they used to perform. The user study revealed that while the performers were interested in the feedback, they felt less inclined to take it into consideration than they would during a local performance.

Publication and resources

- A Multi-Touch DJ Interface with Remote Audience Feedback

Lasse Farnung Laursen, Masataka Goto, Takeo Igarashi, in ACM MM ’14: The 22nd ACM International Conference on Multimedia, pp. 1225-1228

Published PDF - ACM MM ’14 Poster

- Pecha Kucha Presentation Modern Touch Djing

- PlanMixPlay – Related future performance software

Quick Facts

- Performance software built in C++ in one year. Progenitor project for PlanMixPlay.

- Two formal user studies involving 7 DJs conducted as part of the project.

- Four 2-hour live shows also conducted as part of the user study, with an average listener count of 120 on Slayradio.org.

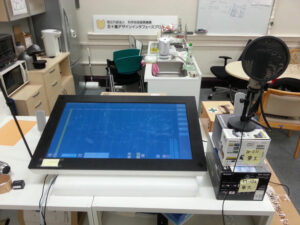

- MT3200 touch-screen used as input device, produced by FlatFrog. The MT3200 was discontinued in 2014.

DJ Interface

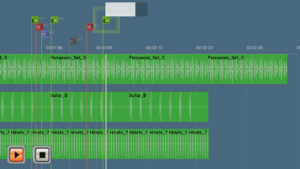

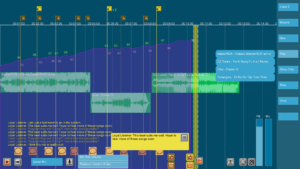

A novel performance interface was developed to support feedback integration as part of this project. The interface consists of an audio time-line visualization, with general playback and visualization controls at the bottom and a set of drop-down menus for loading audio files. The entire time-line supports pinch-gesture zooming for a quick overview or detailed inspection. All loaded audio elements support drag-and-drop style interaction. Two modes of visualized playback are supported: a static time-line, where the time-line is still and the playback line moves, and a moving time-line with a static playback line. The former is ideal for planning ahead and setting up audio elements in advance. The latter is a more dynamic ‘on-the-fly’ style of interaction.

The MT3200 touch-screen is used as an input device. The touch-screen itself supports up to 41 simultaneous touches, as does the DJ interface. The large size of the screen easily allows two users to simultaneously manipulate the time-line and its contained audio elements.

Postmortem

Longer projects, three months or more, tend to invite more introspection than shorter ones. Did I make the right design choices? What could I have done better? Regrets are typically a waste of energy, but self-assessment is necessary to improve. We must analyze our past decision-making, lest we fall into the all too familiar trap of thinking we made all the right moves, because we were the ones making them…

From the outset of the project, a small set of design requirements was determined:

- Fast real-time performance – The system must be responsive. Compared to live performance instruments, touch-screens (especially in 2014) were incredibly laggy. Improving input hardware response times was well beyond the scope of the project, so the next best thing was to ensure the software didn’t aggravate the issue by introducing further lag.

- Robust – The system must be stable enough to execute continuously for several hours with user interaction, without crashing.

Given these requirements, I opted to build the software in C++. In hindsight, given the prototypical nature of the project, I wonder if Python or another higher-level language would have served better. Python, or a similar alternative, would have allowed for faster design iteration, meaning more time to test multiple feedback mechanisms. However, Python could have presented functional barriers that would have required low-level programming regardless. For example, in a recent personal project I got burned a bit by Python and its collection of audio libraries while attempting to manually synchronize two audio streams. The audio libraries (at the time) didn’t allow writing data directly to the audio buffer, so various workarounds had to be attempted, none of which ended up working satisfactorily.

Another design decision I’ve pondered is the decision to build my own GUI library. In 2012, when the project commenced, only a limited number of GUI libraries for C++ featured touch input for Windows applications. Building my own GUI library taught me a lot and allowed me to seamlessly integrate keyboard, mouse, and touch input, but it also turned out to be very time-consuming. The input portion alone took at least 3-4 months to reach its final design iteration. In hindsight, I still think this was probably necessary, as adding touch interaction to a GUI library that only supports mouse and keyboard is no small feat. A mouse always represents a single point of interaction. Touch interaction is akin to one mouse per supported touch, which in the case of the MT3200 was 41. A well-designed GUI library supporting only mouse and keyboard would require substantial refactoring to properly support multiple simultaneous ‘mice’.
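To illustrate the ‘one mouse per touch’ idea, here is a minimal sketch of input routing keyed by a pointer id. It is not the project's actual GUI library: `PointerRouter`, `PointerEvent`, `Phase`, `WidgetId` and `kMousePointer` are hypothetical names, and treating the mouse as pointer id 0 is an assumption made for the example. Mouse hover (movement without a button press) is deliberately left out.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <utility>

// Hypothetical sketch: none of these names come from the original library.
using PointerId = std::uint32_t;
using WidgetId = int;

constexpr WidgetId kNoWidget = -1;
constexpr PointerId kMousePointer = 0;  // assumption: touch ids start at 1

enum class Phase { Down, Move, Up };

struct PointerEvent {
    PointerId id;   // which mouse or finger produced the event
    Phase phase;
    float x;
    float y;
};

// Routes each pointer's events to the widget it first touched, so several
// fingers can drag different widgets at the same time.
class PointerRouter {
public:
    // Returns the widget under a screen position, or kNoWidget.
    using HitTest = std::function<WidgetId(float, float)>;

    explicit PointerRouter(HitTest hitTest) : hitTest_(std::move(hitTest)) {}

    // Returns the widget that should receive this event.
    WidgetId route(const PointerEvent& e) {
        if (e.phase == Phase::Down) {
            // A new pointer captures whatever widget lies beneath it.
            const WidgetId target = hitTest_(e.x, e.y);
            captures_[e.id] = target;
            return target;
        }
        const auto it = captures_.find(e.id);
        if (it == captures_.end()) {
            return kNoWidget;  // Move/Up from a pointer we never saw go down
        }
        const WidgetId target = it->second;
        if (e.phase == Phase::Up) {
            captures_.erase(it);  // the pointer is gone, release the capture
        }
        return target;
    }

    std::size_t activePointers() const { return captures_.size(); }

private:
    HitTest hitTest_;
    std::unordered_map<PointerId, WidgetId> captures_;
};
```

A mouse-only design corresponds to the special case where the map never holds more than the single `kMousePointer` entry. Retrofitting a library that stores that state in one global ‘current mouse target’ is where most of the refactoring effort would likely go.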

Observations and results

Here are the most interesting findings from this project with regard to integrated remote audience feedback:

- Preference for comments – The DJs generally preferred comments to likes, as they felt it showed more of an effort on behalf of the audience member.

- Filter use was non-existent – The DJs never filtered any of the feedback at any point during the two-hour shows. However, this is likely because the interface was very simple overall, which kept the feedback from being intrusive.

- Application in a local setting – Some DJs expressed an interest in having this type of feedback available during a local performance.

- No strong differentiation between feedback types – Overall, the DJs did not seem to value the feedback differently depending on the type (gold/bronze).

The participating DJs also made a number of interesting observations:

The feedback was helpful. Particularly if I was doing an online set, that would be really useful. [The feedback] is more direct. If we use a dance floor analogy; People will leave the dance floor [due to fatigue or to get a drink,] not cause they don’t like the music […]. […] So in that way, dancing is kind of an imprecise metric.

– DJ Modality

I wouldn’t do a live [remote] mix, and then get some negative feedback, and […] just switch genres. I might do that at a [local] club.

– Philip Huey

It gives you inspiration and energy. […] It works. I was surprised.

– Mark Jackson

It’s interesting to see how people react and what they do. Will it heavily influence what I do? I don’t think so.

– Matthew Gammon

It would help me understand my audience better, know what they want to hear, which could lead to better parties or sets. That’s important. […] I can see a lot of uses for that.

– DJ Vivid

Acknowledgments

This project wouldn’t have come to fruition without the help of both my supervisors, Masataka Goto and Takeo Igarashi, Slaygon and SlayRadio.org, as well as all of the participating DJs.

- Slayradio.org

- Participating DJs

  - Phil Huey

  - Makoto Nakajima

  - DJ Modality

  - Mark Jackson

  - Matthew Gammon

  - DJ Vivid

  - Jason Hasai