Texture synthesis fascinates me, particularly 3D texture synthesis: unlike in the 2D case, there is no simple alternative for capturing data. To obtain two-dimensional texture data we can simply use a camera. Three-dimensional texture data offers no such shortcut.

Most approaches to synthesizing textures fall into one of two types: parametric and non-parametric. In short, the parametric approach builds a mathematical model from some input (a texture exemplar) and then uses this model to create new synthesized textures. Non-parametric is quite a wide term, but these approaches often boil down to skipping the model-creation step and instead stitching together new textures by re-using data directly from the exemplar.

Publication and resources

- Anisotropic 3D texture synthesis with application to volume rendering

Lasse Farnung Laursen, Bjarne Kjær Ersbøll, Jakob Andreas Bærentzen, in WSCG ’11: Winter School of Computer Graphics 2011, pp. 49-57

Local PDF | Published PDF

- Improving texture optimization with application to visualizing meat products

Line Clemmensen, Lasse Farnung Laursen, in Scandinavian Workshop on Imaging Food Quality 2011, pp. 81-86

Local PDF | Published PDF

- Automatic Quality Measurement and Parameter Selection for Example-based Texture Synthesis

Lasse Farnung Laursen, Line Clemmensen, Jakob Andreas Bærentzen, Takeo Igarashi, Bjarne Kjær Ersbøll, as internal publication 2012

Local PDF

Quick Facts

- Synthesis code created from scratch in C++.

- Currently unreleased, as it’d need some serious polish. Maybe if there’s enough interest.

- Integral part of Virtual Cuts project.

- Conference and workshop publications.

Foundation and goals

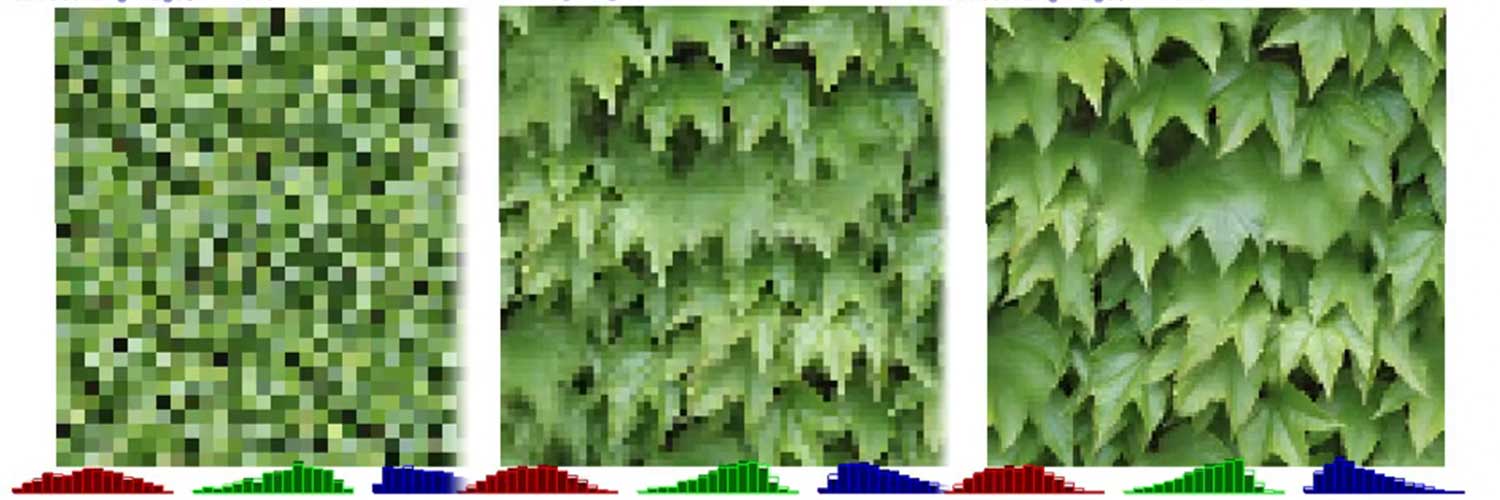

The work featured in this project is heavily based on the research of Kopf et al. Their publication shows some impressive-looking 3D textures that, at the time, surpassed all other contemporary efforts. Their method rests on the assumption that the inside of the texture resembles the outside to some degree. The algorithm starts with a random set of samples from the original texture and iteratively improves upon it until it matches larger, more complete parts of the original texture. The videos below show this iterative process in action.
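
To make the "start random, then iteratively improve" idea concrete, here is a minimal 1D toy sketch. It is not the method of Kopf et al. and not the code from this project: it assumes a plain least-squares search-and-average scheme, and every name in it (`searchStep`, `averageStep`, `synthesize`, `energyLog`) is hypothetical. Each pass matches every output window to its closest exemplar window, then replaces each output sample with the average of what the overlapping matches propose.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <random>
#include <vector>

// Toy 1D analogue of search-and-average texture refinement.
// Assumption: all names and the least-squares formulation are illustrative.

// Search step: match every output window (wrapping around the output) to its
// closest exemplar window by sum of squared differences.
// Returns the total distance ("energy") over all windows.
float searchStep(const std::vector<float>& out, const std::vector<float>& ex,
                 std::size_t width, std::vector<std::size_t>& match) {
    float energy = 0.0f;
    for (std::size_t s = 0; s < out.size(); ++s) {
        float best = std::numeric_limits<float>::max();
        for (std::size_t e = 0; e + width <= ex.size(); ++e) {
            float d = 0.0f;
            for (std::size_t k = 0; k < width; ++k) {
                const float diff = out[(s + k) % out.size()] - ex[e + k];
                d += diff * diff;
            }
            if (d < best) { best = d; match[s] = e; }
        }
        energy += best;
    }
    return energy;
}

// Averaging step: every output sample is covered by exactly `width` windows;
// it becomes the mean of the exemplar values those matched windows propose.
void averageStep(std::vector<float>& out, const std::vector<float>& ex,
                 std::size_t width, const std::vector<std::size_t>& match) {
    std::vector<float> sum(out.size(), 0.0f);
    for (std::size_t s = 0; s < out.size(); ++s)
        for (std::size_t k = 0; k < width; ++k)
            sum[(s + k) % out.size()] += ex[match[s] + k];
    for (std::size_t i = 0; i < out.size(); ++i)
        out[i] = sum[i] / static_cast<float>(width);
}

// Start from random exemplar samples, then alternate the two steps.
// If energyLog is non-null, it receives the energy measured in each search step.
std::vector<float> synthesize(const std::vector<float>& ex, std::size_t outSize,
                              std::size_t width, int iterations, unsigned seed,
                              std::vector<float>* energyLog = nullptr) {
    assert(width >= 1 && width <= ex.size() && width <= outSize);
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, ex.size() - 1);
    std::vector<float> out(outSize);
    for (float& v : out) v = ex[pick(rng)];  // random initial samples

    std::vector<std::size_t> match(outSize, 0);
    for (int it = 0; it < iterations; ++it) {
        const float energy = searchStep(out, ex, width, match);
        if (energyLog) energyLog->push_back(energy);
        averageStep(out, ex, width, match);
    }
    return out;
}
```

Calling `synthesize(exemplar, 16, 3, 5, 42u, &energies)` fills `energies` with one value per pass, which gives a rough view of how the output converges towards the exemplar. A real solid-texture synthesizer works on 3D volumes and 2D exemplar slices and adds considerably more machinery than this toy shows.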

Part of my Ph.D. thesis concerned improving the visual quality of real-time volumetric rendering of CT-scanned pig data. Texturing a volume is significantly simpler when the applied texture is 3D rather than 2D.

Anisotropic synthesis

Texturing CT-scanned pig data generally requires three types of tissue to be represented: bone, meat and fat. Actual pig tissue also contains various intermediate tissue types between meat and fat. Rather than explicitly providing additional connective tissue textures, we found that blending the meat and fat textures was sufficient for our needs.
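
As a rough illustration of the blending idea, the sketch below mixes a meat sample and a fat sample linearly. The linear blend, the `Rgb` type, the `blendTissue` name and the `fatFraction` parameter are all assumptions made for this example. The actual blend used in the project may differ.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical RGB texture sample with components in [0, 1].
using Rgb = std::array<float, 3>;

// Linearly blend a meat sample with a fat sample.
// fatFraction = 0 yields pure meat, fatFraction = 1 yields pure fat.
// Assumption: a plain linear mix; not necessarily what the project used.
Rgb blendTissue(const Rgb& meat, const Rgb& fat, float fatFraction) {
    assert(fatFraction >= 0.0f && fatFraction <= 1.0f);
    Rgb out{};
    for (std::size_t c = 0; c < out.size(); ++c) {
        out[c] = (1.0f - fatFraction) * meat[c] + fatFraction * fat[c];
    }
    return out;
}
```

In a volume renderer, `fatFraction` could plausibly be derived per voxel from the CT density, so intermediate densities get an intermediate look without a dedicated texture.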

Our research concentrated on using the method devised by Kopf et al. to create anisotropic textures, since pig tissue isn’t particularly uniform. The culmination of our work was published at WSCG ’11 and can be downloaded via a link above.

Automating detection of optimal synthesis parameters

Texture synthesis involves numerous parameters. We conducted a series of experiments attempting to semi-automate the detection of optimal settings for them. We found that many textures only produce results as stellar as those in Kopf et al.’s work when specific settings are applied. Our approach can help determine the optimal settings for a number of textures, but doesn’t work reliably across the board. The work is discussed thoroughly in our internal technical report, which can be downloaded via a link above.

In an effort to improve upon the texture synthesis method, we applied the much faster PatchMatch algorithm and conducted a series of empirical studies. Unfortunately, as the videos below show, PatchMatch produces textures that fail to recreate the rich diversity of the original texture. Although it might work as an intermediary step in the algorithm, replacing the complete series of iterations with it gives unsatisfactory results.
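
For readers unfamiliar with PatchMatch, here is a minimal 1D sketch of its core loop: random initialization, propagation of good matches from a neighbour, and a random search in a shrinking radius. It is a simplified illustration rather than the code used in our experiments, and the names `patchDistance` and `patchMatch1D` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Sum of squared differences between the window of `a` starting at i
// and the window of `b` starting at j.
float patchDistance(const std::vector<float>& a, std::size_t i,
                    const std::vector<float>& b, std::size_t j,
                    std::size_t width) {
    float d = 0.0f;
    for (std::size_t k = 0; k < width; ++k) {
        const float diff = a[i + k] - b[j + k];
        d += diff * diff;
    }
    return d;
}

// Approximate nearest-neighbour field: for every window in `target`,
// the start index of a similar window in `source`.
// Assumption: simplified 1D variant for illustration only.
std::vector<std::size_t> patchMatch1D(const std::vector<float>& target,
                                      const std::vector<float>& source,
                                      std::size_t width, int iterations,
                                      unsigned seed) {
    assert(width >= 1 && width <= target.size() && width <= source.size());
    const std::size_t nT = target.size() - width + 1;  // target windows
    const std::size_t nS = source.size() - width + 1;  // source windows
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> any(0, nS - 1);

    // Random initialization.
    std::vector<std::size_t> nnf(nT);
    std::vector<float> cost(nT);
    for (std::size_t i = 0; i < nT; ++i) {
        nnf[i] = any(rng);
        cost[i] = patchDistance(target, i, source, nnf[i], width);
    }

    // Accept a candidate match only if it lowers the cost.
    auto tryCandidate = [&](std::size_t i, std::size_t j) {
        const float d = patchDistance(target, i, source, j, width);
        if (d < cost[i]) { cost[i] = d; nnf[i] = j; }
    };

    for (int it = 0; it < iterations; ++it) {
        const bool forward = (it % 2 == 0);  // alternate scan direction
        for (std::size_t n = 0; n < nT; ++n) {
            const std::size_t i = forward ? n : nT - 1 - n;
            // Propagation: shift the already-visited neighbour's match by one.
            if (forward && i > 0 && nnf[i - 1] + 1 < nS)
                tryCandidate(i, nnf[i - 1] + 1);
            if (!forward && i + 1 < nT && nnf[i + 1] > 0)
                tryCandidate(i, nnf[i + 1] - 1);
            // Random search around the current best, halving the radius.
            for (std::size_t radius = nS; radius >= 1; radius /= 2) {
                const std::size_t lo = nnf[i] > radius ? nnf[i] - radius : 0;
                const std::size_t hi = std::min(nS - 1, nnf[i] + radius);
                std::uniform_int_distribution<std::size_t> local(lo, hi);
                tryCandidate(i, local(rng));
            }
        }
    }
    return nnf;
}
```

The speed comes from testing only a handful of candidates per window instead of every source window. The result is approximate, and the propagation step tends to copy matches from neighbouring windows.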